
Engineers integrate internal robotic tactile sensors

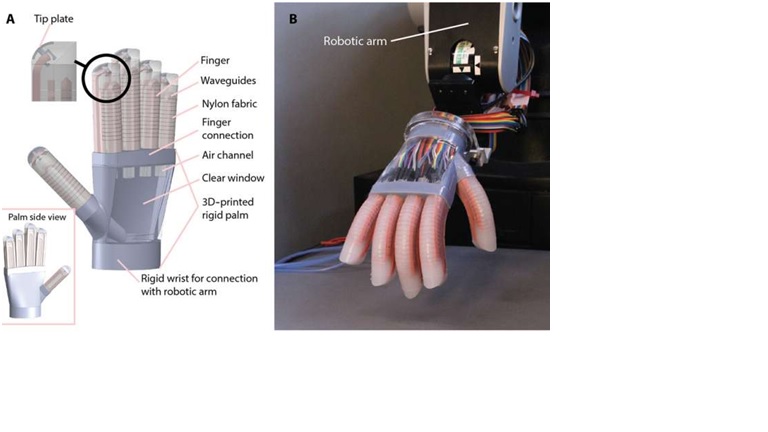

Doctoral student Shuo Li shakes hands with an optoelectronically innervated prosthesis. Credit: Huichan Zhao/Provided

Most robots achieve grasping and tactile sensing through motorized means, which can be excessively bulky and rigid. A Cornell group has devised a way for a soft robot to feel its surroundings internally, in much the same way humans do.


Shepherd's group employed a four-step soft lithography process to produce the core (through which light propagates), and the cladding (outer surface of the waveguide), which also houses the LED (light-emitting diode) and the photodiode.

The more the prosthetic hand deforms, the more light is lost through the core. That variable loss of light, as detected by the photodiode, is what allows the prosthesis to "sense" its surroundings.

"If no light was lost when we bend the prosthesis, we wouldn't get any information about the state of the sensor," Shepherd said. "The amount of loss is dependent on how it's bent."
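As a rough illustration of the sensing principle described above, the light lost in a waveguide can be expressed in decibels relative to the reading taken when the finger is undeformed. The sketch below is not the group's code: the function name `power_loss_db` and the sample readings are hypothetical, and it assumes only that the photodiode output is proportional to the optical power reaching it.

```python
import math


def power_loss_db(baseline_power: float, measured_power: float) -> float:
    """Return optical power loss in dB relative to the undeformed baseline.

    Both arguments are photodiode readings in the same (arbitrary) units.
    A result of 0 dB means no additional light was lost.
    """
    if baseline_power <= 0 or measured_power <= 0:
        raise ValueError("power readings must be positive")
    return 10.0 * math.log10(baseline_power / measured_power)


# Hypothetical readings (arbitrary units), not data from the study:
# the first is the undeformed baseline, the others are taken while bending.
baseline = 1.0
readings = [1.0, 0.5, 0.1]
losses = [power_loss_db(baseline, r) for r in readings]

for reading, loss in zip(readings, losses):
    print(f"reading={reading:.2f}  loss={loss:.2f} dB")
```

Under these assumptions, a reading that falls to half the baseline corresponds to a loss of about 3 dB, and a tenfold drop to 10 dB. Mapping a given loss back to a specific bend or pressure would require a calibration that the article does not describe.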

(A) Schematic of hand structure and components; (B) image of the fabricated hand mounted on a robotic arm with each finger actuated at ΔP = 100 kPa. Credit: Cornell University

The group used its optoelectronic prosthesis to perform a variety of tasks, including grasping and probing for both shape and texture. Most notably, the hand was able to scan three tomatoes and determine, by softness, which was the ripest.


Zhao said this technology has many potential uses beyond prostheses, including bio-inspired robots, which Shepherd has explored along with Mason Peck, associate professor of mechanical and aerospace engineering, for use in space exploration.

"That project has no sensory feedback," Shepherd said, referring to the collaboration with Peck, "but if we did have sensors, we could monitor in real time the shape change during combustion [through water electrolysis] and develop better actuation sequences to make it move faster."

Future work on optical waveguides in soft robotics will focus on increased sensory capabilities, in part by 3-D printing more complex sensor shapes, and by incorporating machine learning as a way of decoupling signals from an increased number of sensors. "Right now," Shepherd said, "it's hard to localize where a touch is coming from."

Explore further: 'Octopus-like' skin can stretch, sense touch, and emit light (w/ Video)

More information: Huichan Zhao et al. Optoelectronically innervated soft prosthetic hand via stretchable optical


Date: 30/12/2015